Securing Packages in npm, Homebrew, PyPI, Maven Central, and RubyGems

2025 Aug

This talk was about rolling out package signing and build provenance across open source package repositories. It was given at USENIX Security's Enigma track.

We're going to talk about a new security capability that has been rolling out to open source package repositories, with a few important caveats. The first important caveat is that this work was done by dozens of people across several organizations. I helped with this work on npm, which is operated by GitHub, but other repositories are run by other companies or non-profit foundations.

This work was coordinated through the OpenSSF's Securing Software Repositories working group, where the folks who operate these repositories can talk about what's working, what's not, and exchange implementation guidance.

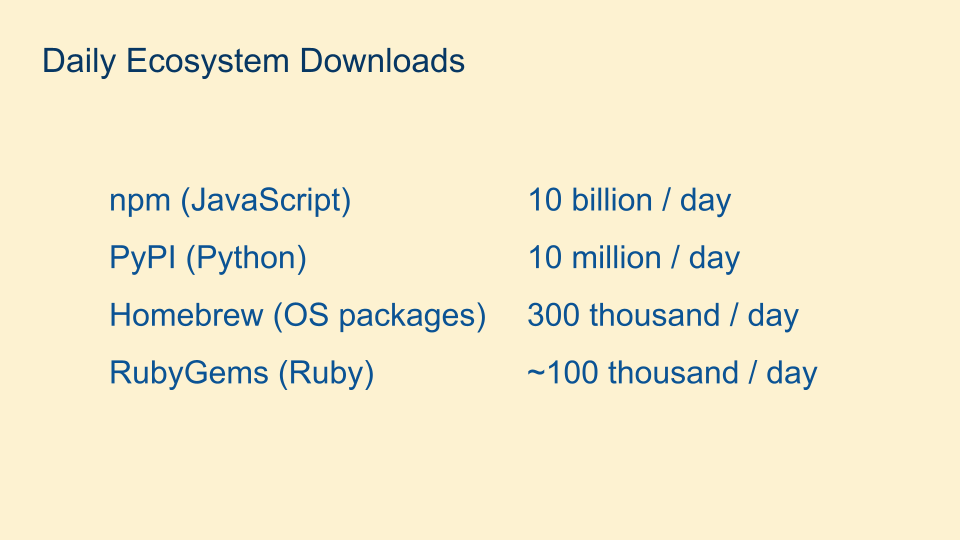

It's hard to overstate how important these repositories are to software development. They serve literally billions of downloads per day, both to people developing software of their own and to people installing applications meant to be run as-is (particularly with Homebrew, but in other ecosystems as well).

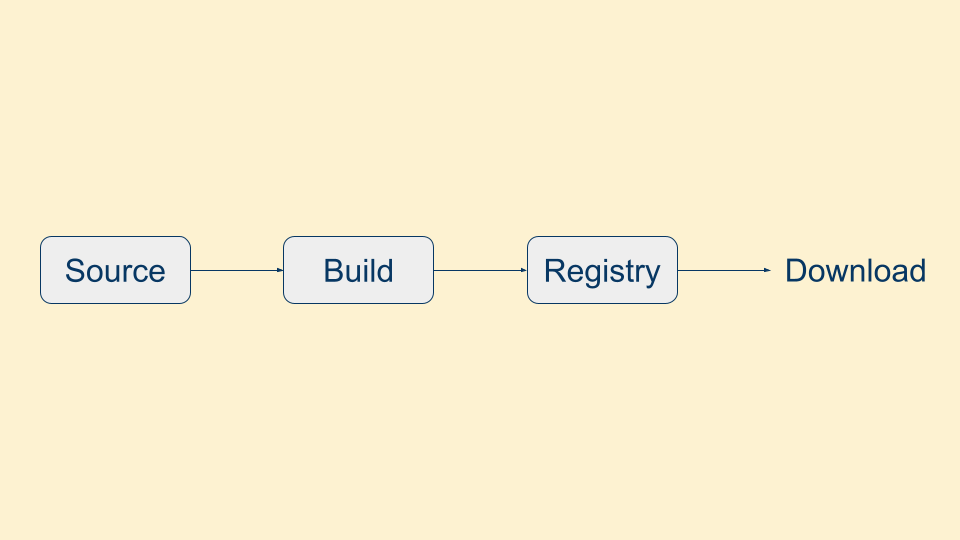

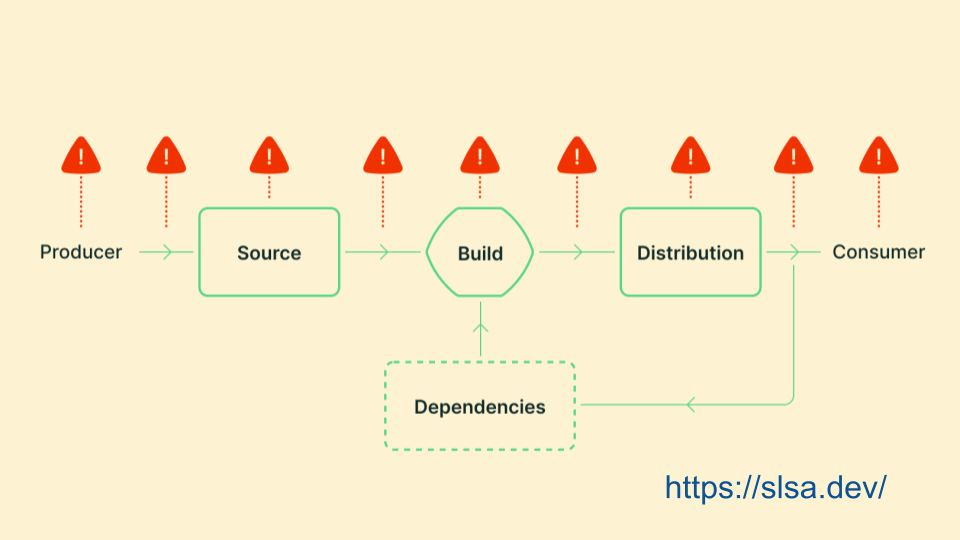

But what has to happen before you can download a package from these repositories? First the code has to be written, and because it's open source, there's usually a public repository of the source code hosted somewhere. Then the code goes through some sort of build process to produce a package. This could take place on a cloud build system like GitHub Actions or GitLab CI/CD pipelines, or it could be done on a developer's computer. Then the package is uploaded to the registry (another name for the package repository), where people can finally download and use it.

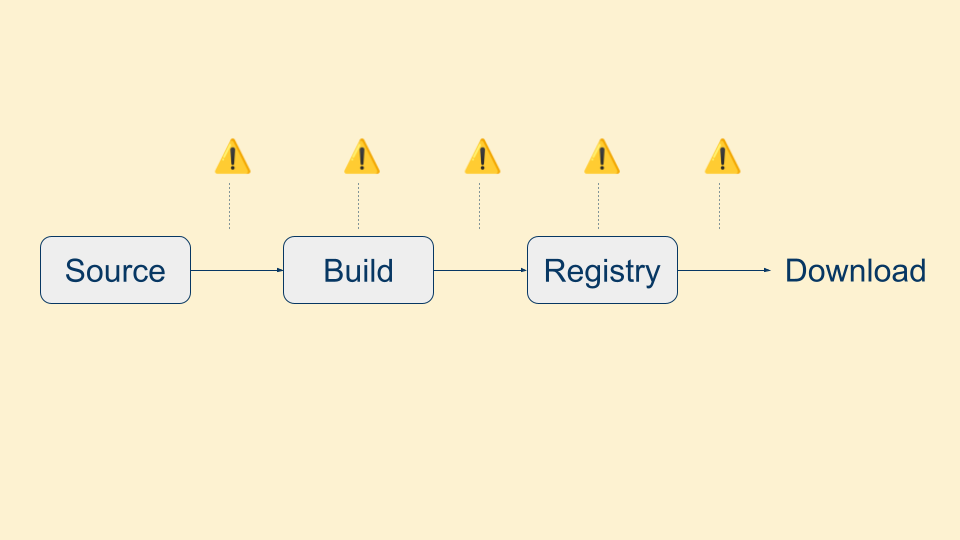

Unfortunately, because these registries are so popular, there are a number of "points of interest" where attackers can try to get malicious code into packages. They could try to hide malicious code somewhere in the source repository, or build from a copy of the repository that has had malicious code added. They could try to tamper with the build process, which often isn't monitored as closely as the source code. They could try to tamper with the package in transit, either after the build but before it's uploaded to the registry, or as the end user downloads it from the registry.

Or if you're feeling particularly bold, you could attack the package repository directly and try to tamper with the stored package.

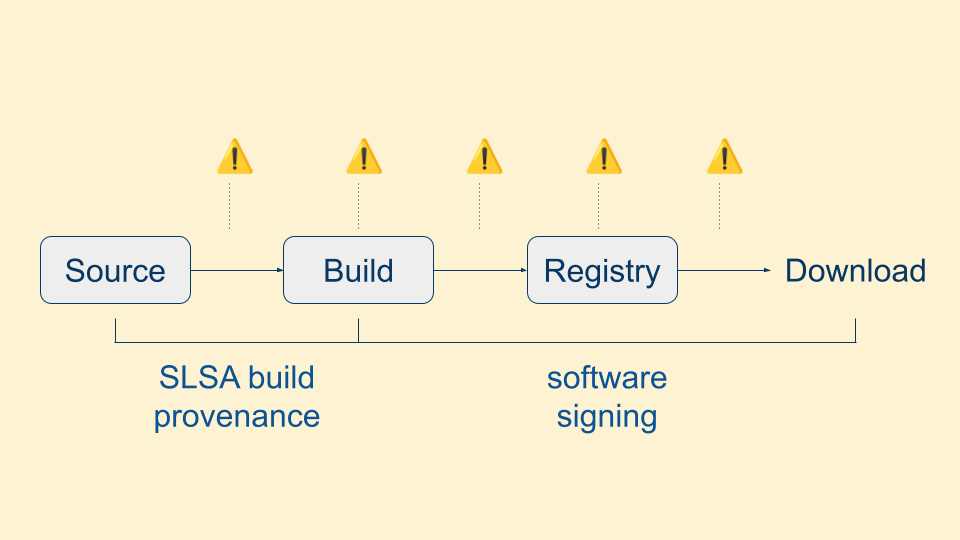

Defending package repositories is a subset of the larger problem of end-to-end software supply chain security. Another technical initiative of the OpenSSF is SLSA, which catalogs the entire process of software development and the attacks that can occur at each step. But for package repositories, we can scope this problem to the latter part of this process. This is the second important caveat: we aren't solving all software supply chain problems here, but we are helping make progress on part of the problem.

We're going to be using two techniques: one that's been around for a while and one that's newer. Signing software to protect its integrity has been around for decades: if the package is tampered with, the signature will fail to verify. To that we're adding SLSA build provenance, which provides non-falsifiable links back to the exact source code, build process, and build logs that were used to produce the package.

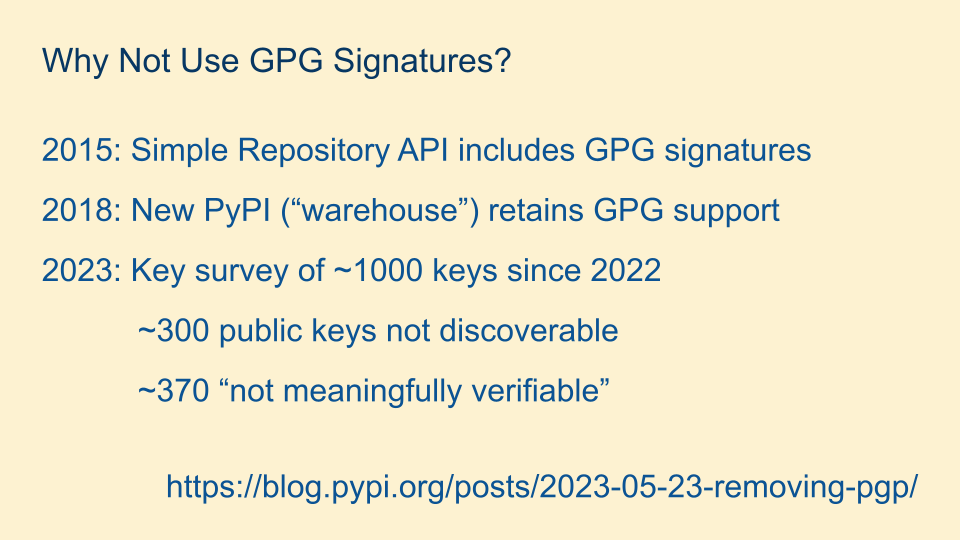

Since the practice of signing software isn't new, why not use an existing solution like GPG signatures? This is exactly what PyPI did, 10 years ago. Then two years ago, they did a survey and discovered that not many packages were being signed, and worse, many of those that were signed were not verifiable.

GPG has two "key" problems, quite literally. First, it's difficult for individual maintainers to securely manage the private signing key. Second, it's difficult to distribute the public key needed to verify the signature. Because of these problems, PyPI was looking for a better way to provide signatures for its packages.

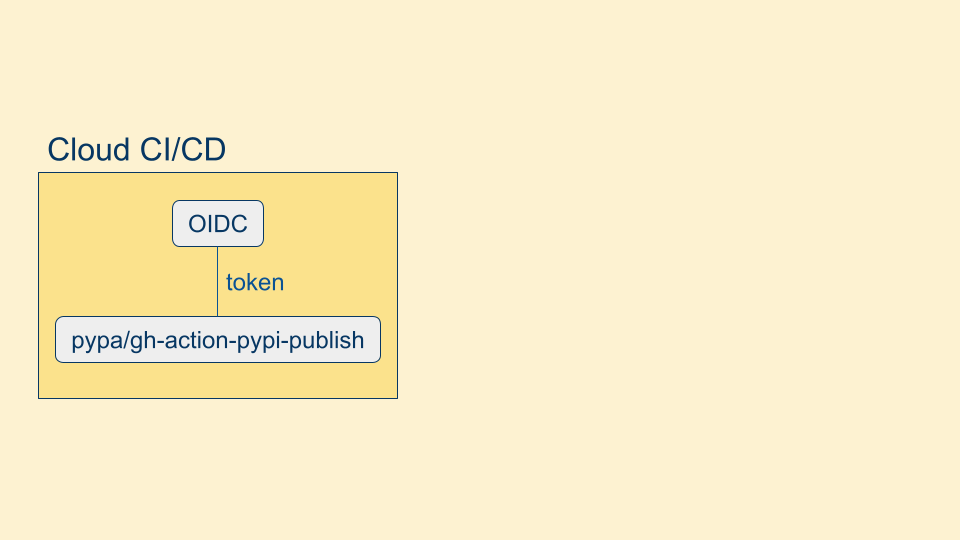

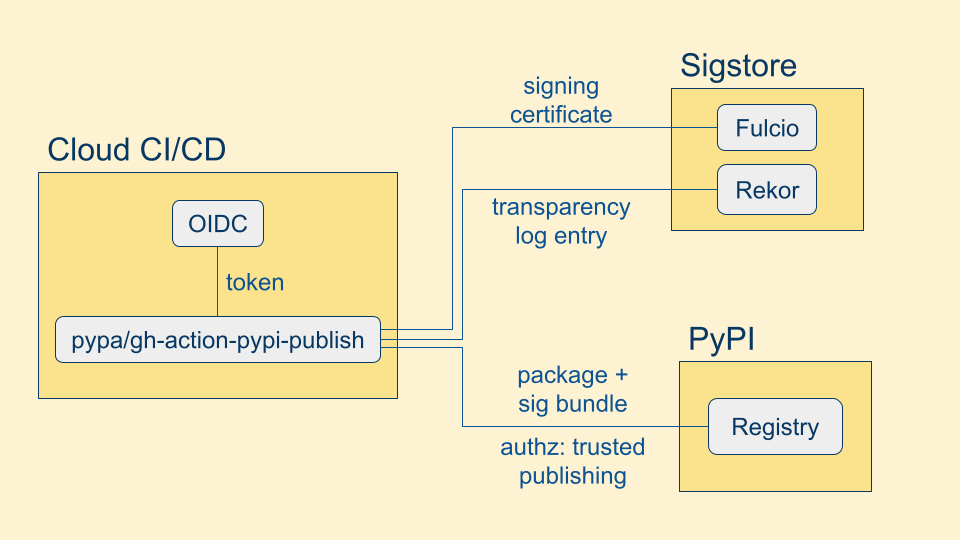

This new capability came about as cloud build systems added the ability to request a workload identity in the form of a signed OIDC token. This token contains the SLSA build provenance properties we care about. The user-controlled build process can read the token, but the build platform signs it, so any tampering would cause the signature to fail verification.

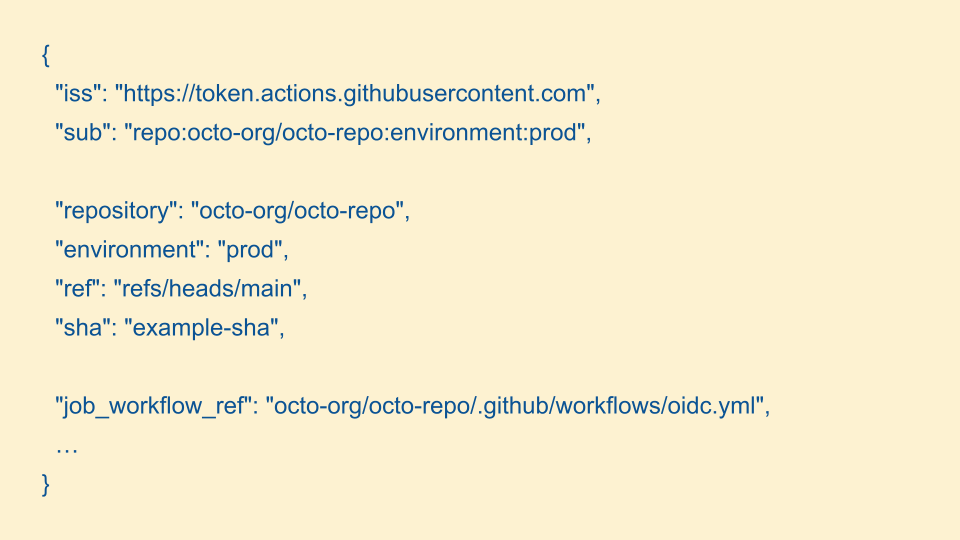

Here's an excerpt of what that OIDC token looks like in GitHub Actions. The issuer identifies the build platform. The token also records the exact repository and its owner, the commit SHA the build ran against, and a link to the exact build instructions.
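
To make the excerpt concrete, here's a minimal Python sketch that pulls those claims out of a token. It assumes GitHub Actions' claim names (`iss`, `repository`, `repository_owner`, `sha`, `job_workflow_ref`). `decode_jwt_claims` and `provenance_summary` are hypothetical helpers. The sketch deliberately skips signature verification, which a real consumer such as Fulcio must perform before trusting anything in the token.

```python
import base64
import json


def decode_jwt_claims(token: str) -> dict:
    """Return the claims from a JWT's payload segment WITHOUT verifying it.

    Inspection only: a real verifier must first check the signature
    against the issuer's published keys.
    """
    _header_b64, payload_b64, _signature_b64 = token.split(".")
    # JWT segments are base64url-encoded with the '=' padding stripped.
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


# The provenance-relevant claims discussed above, using GitHub Actions' names.
PROVENANCE_CLAIMS = ("iss", "repository", "repository_owner", "sha", "job_workflow_ref")


def provenance_summary(token: str) -> dict:
    """Keep only the provenance-relevant claims (hypothetical helper)."""
    claims = decode_jwt_claims(token)
    return {name: claims.get(name) for name in PROVENANCE_CLAIMS}
```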

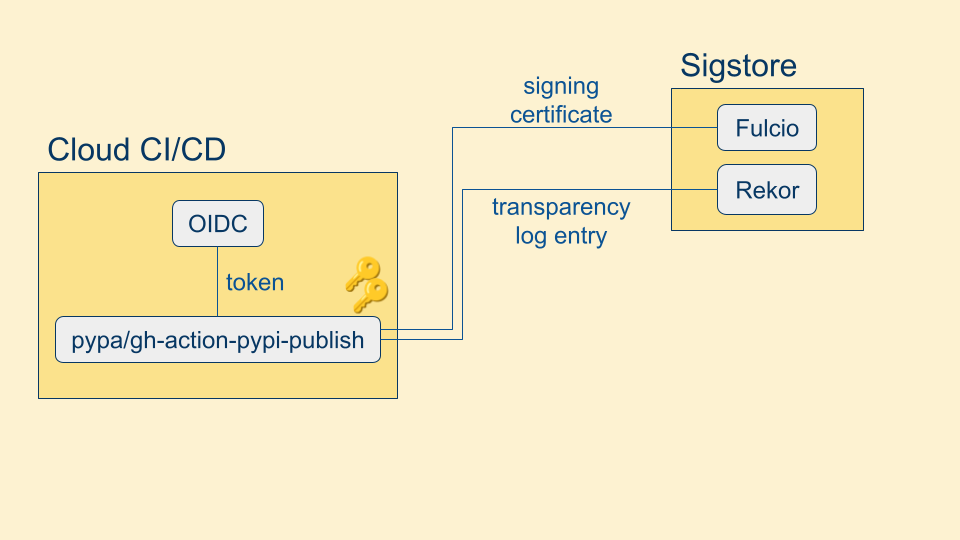

Once we have the OIDC token, we generate a public-private keypair to sign our build. We sign the build with the private key and then do something unconventional: we throw the private key away! Since our signing keys are ephemeral, we don't have to worry about storing the private key securely.
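
The sign-then-discard pattern can be sketched in Python. This is a minimal sketch, not what Sigstore clients do. We swap in a Lamport one-time signature over SHA-256 purely so the example runs on the standard library; Sigstore clients typically generate ECDSA keys. A one-time scheme does fit the idea well, though, because each key signs exactly one build. All function names here are hypothetical.

```python
import hashlib
import secrets

# Stand-in scheme: a Lamport one-time signature over SHA-256, chosen only
# because it needs nothing beyond the standard library.


def generate_keypair():
    """Return (private_key, public_key), intended to sign exactly one message."""
    private_key = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public_key = [(hashlib.sha256(a).digest(), hashlib.sha256(b).digest()) for a, b in private_key]
    return private_key, public_key


def _digest_bits(message: bytes):
    digest = hashlib.sha256(message).digest()
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(private_key, message: bytes):
    # Reveal one secret from each pair, selected by the message digest's bits.
    return [pair[bit] for pair, bit in zip(private_key, _digest_bits(message))]


def verify(public_key, message: bytes, signature) -> bool:
    if len(signature) != 256:
        return False
    return all(
        hashlib.sha256(revealed).digest() == pair[bit]
        for revealed, pair, bit in zip(signature, public_key, _digest_bits(message))
    )


def sign_build(artifact: bytes):
    """Sign with a fresh keypair, then discard the private key."""
    private_key, public_key = generate_keypair()
    signature = sign(private_key, artifact)
    del private_key  # ephemeral: drop our only reference; the key is never written anywhere
    return public_key, signature
```

Only the public key and the signature leave the build. In the real flow, the public key is what gets bound to the build's identity in a certificate.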

We then send the OIDC token and the public key to Fulcio, a component of Sigstore, which is an open source project and public-good service. Fulcio verifies the OIDC token with the issuer and returns an X.509 signing certificate, with the properties from the OIDC token included as certificate extensions. We also send our signature to Sigstore Rekor, a public, append-only transparency log. Verifying a Sigstore signature requires a transparency log entry, which ensures that you can monitor when your workload identity is being used to sign content.
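
A transparency log is auditable because each entry comes with a proof that it is included in the log's Merkle tree. The sketch below follows the RFC 6962 / RFC 9162 Merkle tree hashing used by Certificate Transparency. To our understanding, Rekor's log uses the same construction. The helper names are hypothetical. A real client would also check the log's signed tree head, and append-only guarantees come from separate consistency proofs that this sketch leaves out.

```python
import hashlib


def leaf_hash(entry: bytes) -> bytes:
    return hashlib.sha256(b"\x00" + entry).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()


def _split(n: int) -> int:
    """Largest power of two strictly less than n (n >= 2)."""
    k = 1
    while k * 2 < n:
        k *= 2
    return k


def merkle_root(entries: list) -> bytes:
    """Root of a non-empty log (log side)."""
    if len(entries) == 1:
        return leaf_hash(entries[0])
    k = _split(len(entries))
    return node_hash(merkle_root(entries[:k]), merkle_root(entries[k:]))


def inclusion_path(index: int, entries: list) -> list:
    """Audit path for entries[index], ordered from the leaf upward (log side)."""
    if len(entries) == 1:
        return []
    k = _split(len(entries))
    if index < k:
        return inclusion_path(index, entries[:k]) + [merkle_root(entries[k:])]
    return inclusion_path(index - k, entries[k:]) + [merkle_root(entries[:k])]


def verify_inclusion(index: int, tree_size: int, entry_hash: bytes, path: list, root: bytes) -> bool:
    """Client side: the RFC 9162 inclusion-proof check."""
    if index >= tree_size:
        return False
    fn, sn, r = index, tree_size - 1, entry_hash
    for p in path:
        if sn == 0:
            return False
        if fn & 1 or fn == sn:
            r = node_hash(p, r)
            # Skip levels where this node has no right sibling.
            while not fn & 1 and fn != 0:
                fn >>= 1
                sn >>= 1
        else:
            r = node_hash(r, p)
        fn >>= 1
        sn >>= 1
    return sn == 0 and r == root
```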

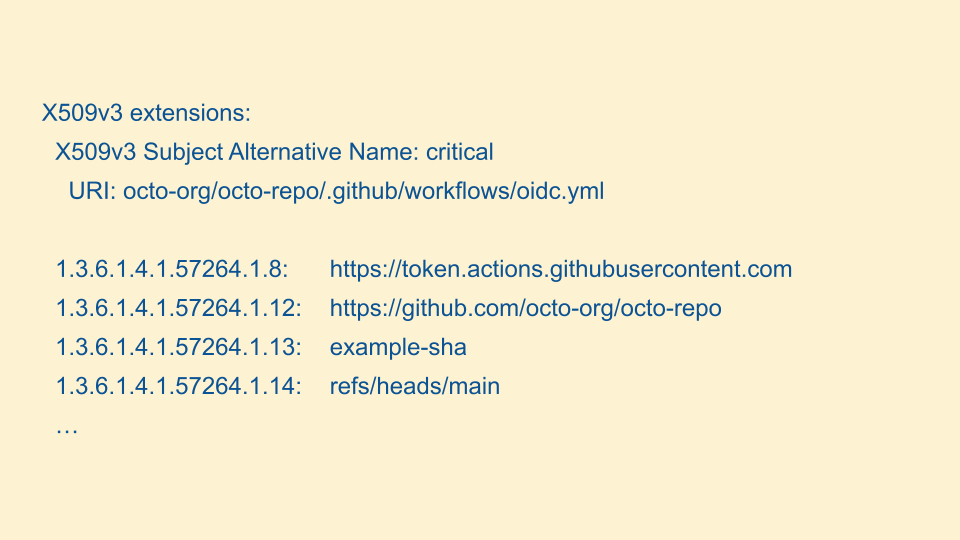

Here's an example of what the X.509 certificate extensions look like. They carry the same properties as the OIDC token, each stored under a Sigstore-specific OID.
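
Below is a rough Python sketch of that correspondence for GitHub Actions. The OIDs and extension names reflect our reading of Fulcio's OID documentation and should be checked against it. `github_claims_to_extensions` is hypothetical. It covers only a subset of the extensions and skips the ASN.1 encoding Fulcio actually applies to each value.

```python
# Subset of Fulcio's certificate extension OIDs (as we understand its OID docs).
FULCIO_OIDS = {
    "Issuer (V2)": "1.3.6.1.4.1.57264.1.8",
    "Build Signer URI": "1.3.6.1.4.1.57264.1.9",
    "Source Repository URI": "1.3.6.1.4.1.57264.1.12",
    "Source Repository Digest": "1.3.6.1.4.1.57264.1.13",
    "Source Repository Ref": "1.3.6.1.4.1.57264.1.14",
    "Build Trigger": "1.3.6.1.4.1.57264.1.20",
}

GITHUB_URL = "https://github.com/"


def github_claims_to_extensions(claims: dict) -> dict:
    """Map GitHub Actions OIDC claims to {OID: value} (simplified, hypothetical)."""
    values = {
        "Issuer (V2)": claims["iss"],
        "Build Signer URI": GITHUB_URL + claims["job_workflow_ref"],
        "Source Repository URI": GITHUB_URL + claims["repository"],
        "Source Repository Digest": claims["sha"],
        "Source Repository Ref": claims["ref"],
        "Build Trigger": claims["event_name"],
    }
    return {FULCIO_OIDS[name]: value for name, value in values.items()}
```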

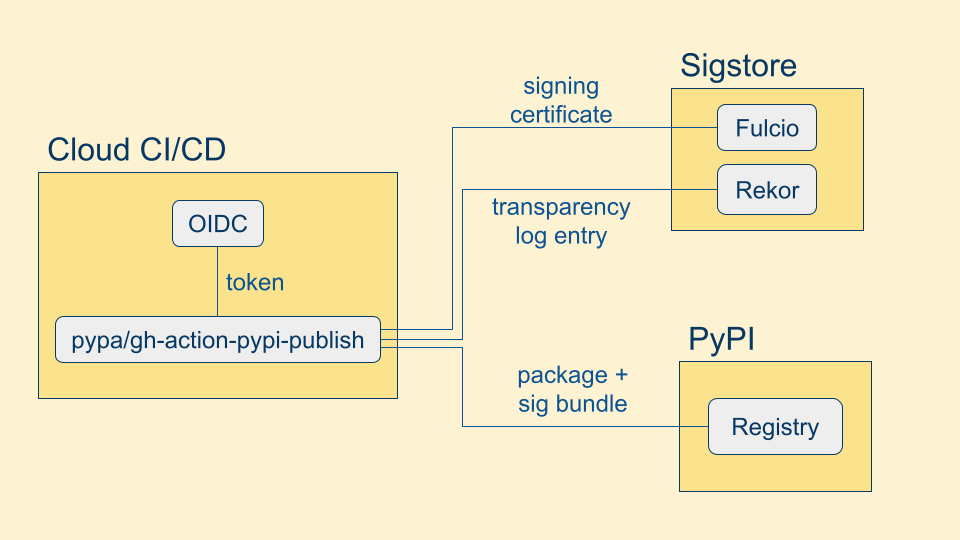

We are finally ready to upload our package! We assemble a Sigstore "bundle" which includes the signature, the X.509 signing certificate, and the transparency log entry.

...but actually there's one more step. We need to authorize our publish to the registry. In the past, this was done by storing a long-lived API key in your build pipeline. But attackers know this, and so they attack build pipelines specifically to extract the API key for later use.

Instead, we can tell PyPI ahead of time that we expect to publish from this cloud build provider, this repository, and this build workflow, setting up a trust relationship. Then we can request another OIDC token from the build platform and use it to authorize our publish. This is known as trusted publishing.
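
On the registry side, the trust relationship boils down to comparing the claims in an already-verified token against what the maintainer registered. This is a minimal sketch assuming GitHub Actions claim names. `TrustedPublisher` and `authorize_publish` are hypothetical and simplified. PyPI's real checks cover more fields, such as an optional deployment environment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrustedPublisher:
    """What a maintainer registers with the package index ahead of time."""
    issuer: str
    repository: str         # "owner/name"
    workflow_filename: str  # e.g. "release.yml"


def authorize_publish(verified_claims: dict, publishers: list) -> bool:
    """Allow an upload only if the (already signature-verified) token matches a registration."""
    # job_workflow_ref looks like "owner/repo/.github/workflows/release.yml@refs/tags/v1".
    workflow_path = verified_claims.get("job_workflow_ref", "").split("@", 1)[0]
    for publisher in publishers:
        expected_path = f"{publisher.repository}/.github/workflows/{publisher.workflow_filename}"
        if (
            verified_claims.get("iss") == publisher.issuer
            and verified_claims.get("repository") == publisher.repository
            and workflow_path == expected_path
        ):
            return True
    return False
```

If the check passes, the registry can hand back a short-lived upload credential instead of relying on a long-lived API key stored in the pipeline.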

Admittedly this process is a bit complex, but for good reason. By distributing the responsibilities across multiple parties, we make it much harder for a compromise of one system to be able to publish malicious content.

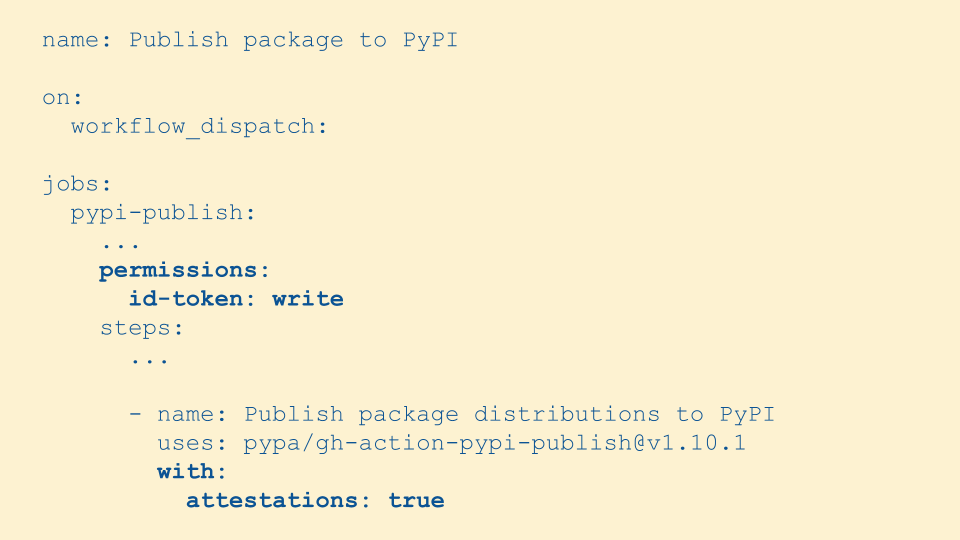

Even though the dataflow diagram is complex, we worked to make it as easy as possible for maintainers to use. Here we have an example of a GitHub Actions workflow that's building a Python package. To publish with build provenance we add a permission to request the OIDC token and we tell the publish step that we want to use attestations.

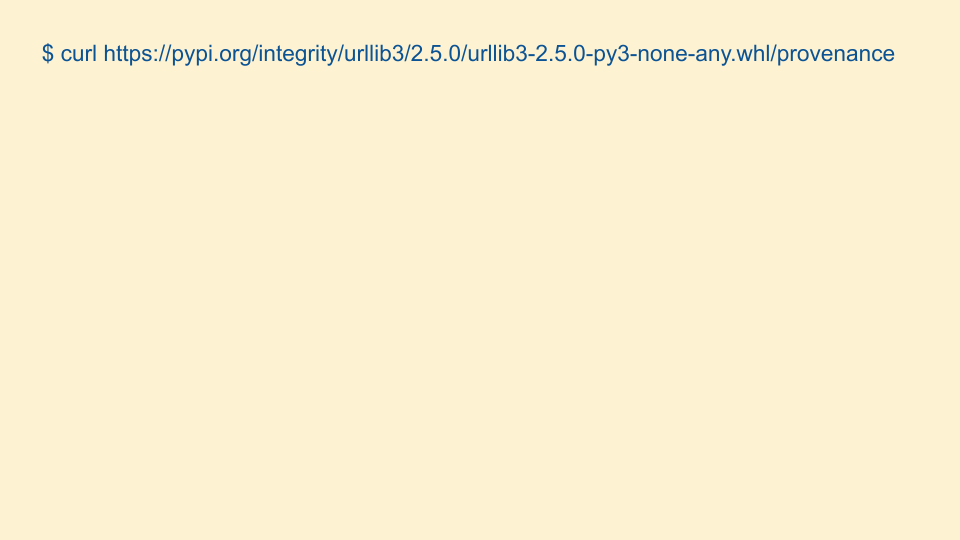

That covers the publishing side. On the consuming side, PyPI added an integrity API. You supply the package name, version, and filename, and it returns the associated Sigstore bundle.
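
Here's a minimal Python sketch of calling that API. It assumes the endpoint path is `/integrity/<project>/<version>/<filename>/provenance` on pypi.org, which is our understanding of PyPI's integrity API docs and worth double-checking. `provenance_url` and `fetch_provenance` are hypothetical helpers.

```python
import json
import urllib.parse
import urllib.request

PYPI = "https://pypi.org"


def provenance_url(project: str, version: str, filename: str) -> str:
    # Assumed path shape: /integrity/<project>/<version>/<filename>/provenance
    parts = [urllib.parse.quote(p, safe="") for p in (project, version, filename)]
    return f"{PYPI}/integrity/{'/'.join(parts)}/provenance"


def fetch_provenance(project: str, version: str, filename: str) -> dict:
    """Download the provenance JSON for one distribution file (needs network access)."""
    with urllib.request.urlopen(provenance_url(project, version, filename)) as resp:
        return json.load(resp)
```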

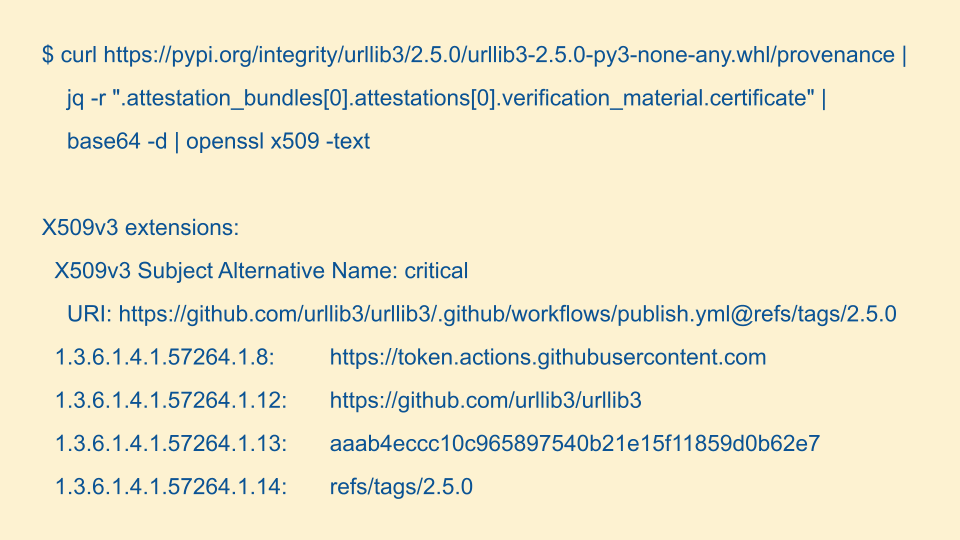

From that bundle we can extract the certificate, base64-decode it, and pass it to openssl to print its contents. You can see the actual production build provenance properties for this published package.
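
The extraction step can be sketched in Python. This assumes the response layout described in PEP 740, where each attestation's `verification_material.certificate` is a base64-encoded DER certificate. Treat that shape as an assumption to verify against PyPI's docs. `certificates_from_provenance` is a hypothetical helper.

```python
import base64


def certificates_from_provenance(provenance: dict) -> list:
    """Return DER-encoded signing certificates from a PyPI provenance object.

    Assumed shape:
    {"attestation_bundles": [{"attestations": [
        {"verification_material": {"certificate": "<base64 DER>"}}]}]}
    """
    certs = []
    for bundle in provenance.get("attestation_bundles", []):
        for attestation in bundle.get("attestations", []):
            encoded = attestation["verification_material"]["certificate"]
            certs.append(base64.b64decode(encoded))
    return certs
```

Write one of the returned byte strings to a file such as `cert.der`. Then `openssl x509 -inform DER -in cert.der -noout -text` prints the certificate, including the provenance extensions.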

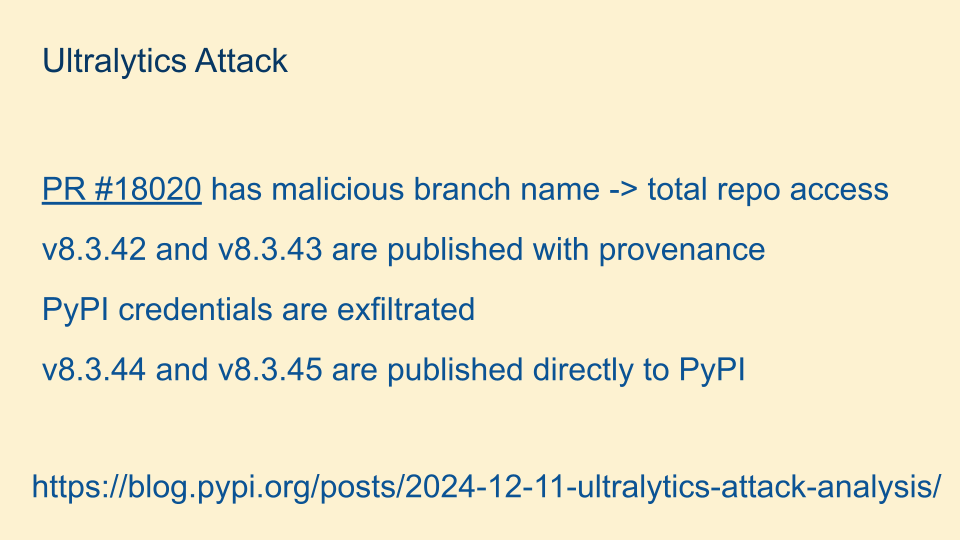

PyPI released provenance in mid-2024, and in December 2024 there was an attack on the popular Ultralytics package. Ultralytics was using GitHub Actions in a way that was vulnerable to an injection attack via the branch name, giving the attacker full control of the GitHub repository. The attacker used this access to publish two releases containing malicious code from GitHub Actions, and to exfiltrate the PyPI publishing credentials.

After the attacker's access to GitHub was removed, they used the stolen credentials to publish two more malicious releases directly to PyPI.

Because Ultralytics was using provenance, the community was able to quickly investigate and determine what happened once they noticed the malicious branch name and the unexpected publishes. This brings us to important caveat number three: just because a package has provenance does not mean the package is secure. Anyone can create an account on GitHub and publish to PyPI. But by following the non-falsifiable links back to the source code and build instructions, you can make a more informed decision about the trustworthiness of a package.

Additionally, if a package used to publish with provenance and then stops, that doesn't mean the package is insecure, but it might be worth double-checking that the change was intentional.
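
That "stopped publishing with provenance" signal is easy to check mechanically. This minimal sketch assumes you've already collected, for each release in order, whether provenance is present (for instance via a registry's integrity API). `releases_missing_provenance` is a hypothetical helper. As the caveat above says, a flagged release is a prompt to double-check, not proof of compromise.

```python
def releases_missing_provenance(history: list) -> list:
    """Flag versions published without provenance after an earlier version had it.

    `history` is a chronological list of (version, has_provenance) pairs.
    """
    flagged = []
    seen_provenance = False
    for version, has_provenance in history:
        if has_provenance:
            seen_provenance = True
        elif seen_provenance:
            flagged.append(version)
    return flagged
```

For example, an upload made with a stolen API token from outside the build pipeline would typically have no provenance, so it would stand out in a check like this.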

The Ultralytics project has since removed its long-lived PyPI API key from its build pipeline. If the repository is compromised in the future, the maintainers only need to regain control of the repository, without also having to rotate credentials in PyPI.

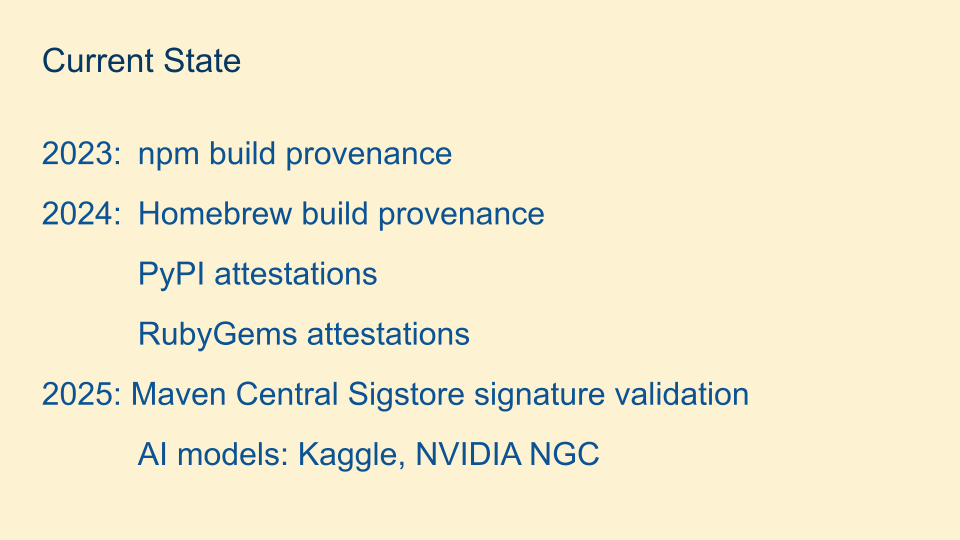

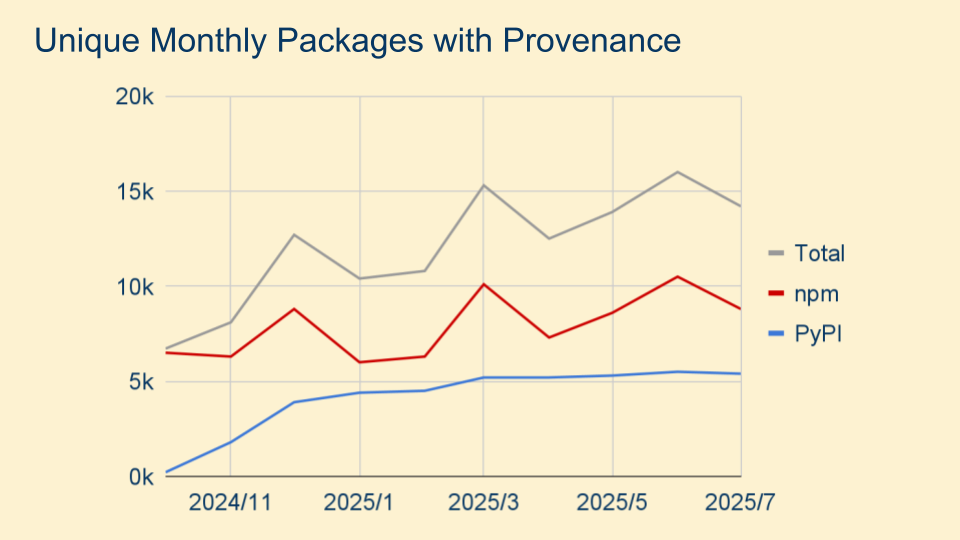

Build provenance has proved quite popular with registries that are looking for an easy way to help their users sign packages. Last year we saw several ecosystems release a build provenance feature, and this year we've seen ML/LLM model registries also make use of provenance to prevent tampering with the models users are downloading and using.

Build provenance is also quite popular with maintainers, especially when combined with trusted publishing. Managing long-lived credentials for one project might not seem like a big deal, but some maintainers handle dozens or even hundreds of projects. Not having to manage secrets makes maintainers' lives easier.

There are so many directions to explore with this work. Many more ecosystems distribute software that could make use of the signing and build provenance Sigstore provides. We're excited to work with model registries and explore other potential partnerships.

We'd like to continue adding supported cloud build platforms. Fulcio documents its requirements for adding a new identity provider.

And of course build provenance is just one of many things you could attest to. The SLSA project is working on source provenance to answer questions about how code landed in a repository: was it reviewed, and did the unit tests and other linters pass?

These attestations, and many more, build on the in-toto project, which was presented at USENIX Security 2019. You can check out the different attestation predicate types for other ideas of what could be attested in the future.
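
All of these attestations share one shape: an in-toto Statement that binds one or more subjects, identified by digest, to a typed predicate. The sketch below builds a Statement and checks that it covers a downloaded artifact. The type URIs are the in-toto Statement v1 and SLSA provenance v1 identifiers as we understand them, and the helper functions are hypothetical. A source provenance or test-result attestation would differ only in `predicateType` and `predicate`.

```python
import hashlib

STATEMENT_TYPE = "https://in-toto.io/Statement/v1"
SLSA_PROVENANCE = "https://slsa.dev/provenance/v1"


def make_statement(artifact_name: str, artifact: bytes, predicate_type: str, predicate: dict) -> dict:
    """Bind one artifact (by SHA-256 digest) to a typed predicate."""
    return {
        "_type": STATEMENT_TYPE,
        "subject": [{"name": artifact_name, "digest": {"sha256": hashlib.sha256(artifact).hexdigest()}}],
        "predicateType": predicate_type,
        "predicate": predicate,
    }


def statement_covers(statement: dict, artifact: bytes) -> bool:
    """True if any subject's sha256 digest matches the artifact we actually have."""
    digest = hashlib.sha256(artifact).hexdigest()
    return any(s.get("digest", {}).get("sha256") == digest for s in statement.get("subject", []))
```

In practice the Statement is then signed and wrapped in an envelope. The digest check is what ties the signed claims to the exact bytes you downloaded.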